Table of Contents

- 1 What is a Ruby global method cache?

- 2 Why would we care?

- 3 What invalidates a Ruby global method cache?

- 4 How can I check if what I do invalidates global method cache?

- 5 Benchmarking methodology

- 6 Method cache invalidation for single threaded applications

- 7 Method cache invalidation for multithreaded applications

- 8 Conclusions

Most programmers treat threads (even MRI threads, which are serialized by the Global VM Lock) as separate spaces in which they can run pieces of code simultaneously. They also tend to assume that there's no "indirect" relation between threads unless it is explicitly declared and/or wanted. Unfortunately, it's not as simple as it seems...

But before we start talking about threads, you need to know what the Ruby method cache is and a bit about how it works.

What is a Ruby global method cache?

There's a great (although a bit outdated) explanation of it in the book "Ruby Under a Microscope":

Depending on the number of superclasses in the chain, method lookup can be time consuming. To alleviate this, Ruby caches the result of a lookup for later use. It records which class or module implemented the method that your code called in two caches: a global method cache and an inline method cache.

Ruby uses the global method cache to save a mapping between the receiver and implementer classes.

The global method cache allows Ruby to skip the method lookup process the next time your code calls a method listed in the first column of the global cache. After your code has called Fixnum#times once, Ruby knows that it can execute the Integer#times method, regardless of from where in your program you call times.

Now the update: in Ruby 2.1+ there is still a method cache and it is still global, but it is based on the class hierarchy: an invalidation affects only the class in question and any of its subclasses. So my case is still valid in Ruby 2.1+.
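To make the quoted explanation more concrete, here is a minimal sketch of the lookup that the cache lets Ruby skip. The helper `find_implementer` is a hypothetical name of mine, not a Ruby API, and it only approximates what the VM does (it ignores singleton classes and refinements):

```ruby
# Hypothetical helper (not part of Ruby): walks the ancestor chain the way an
# uncached lookup conceptually does and returns the first class or module
# that defines the method itself.
def find_implementer(klass, method_name)
  klass.ancestors.find do |ancestor|
    ancestor.instance_methods(false).include?(method_name) ||
      ancestor.private_instance_methods(false).include?(method_name)
  end
end

receiver_class = 5.class # Fixnum on older Rubies, Integer on 2.4+
implementer = find_implementer(receiver_class, :times)

puts "#{receiver_class}#times is implemented by #{implementer}"

# Ruby's own answer, via Method#owner, should agree with the manual walk.
puts 5.method(:times).owner == implementer
```

The longer the ancestor chain, the more steps such a walk takes, which is why caching the receiver-to-implementer mapping pays off.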

Why would we care?

Because careless and frequent invalidation of this cache can have quite a big impact on our Ruby software. This is especially true for multithreaded applications, since there's a single global method cache per process. Constant invalidation forces a method lookup again and again...

What invalidates a Ruby global method cache?

There are quite a lot of things that invalidate it. A quite comprehensive list is available here, but as a summary:

- Defining new methods

- Removing methods

- Aliasing methods

- Setting constants

- Removing constants

- Changing the visibility of constants

- Defining classes

- Defining modules

- Module including

- Module prepending

- Module extending

- Using refinements

- Garbage collecting a class or module

- Marshal loading an extended object

- Autoload

- Exceptions raised by (some) non-blocking methods

- OpenStruct instantiations

How can I check if what I do invalidates global method cache?

You can use the RubyVM.stat method. Note that it only shows changes that add or remove something. Changing visibility invalidates the method cache too, but it won't be visible using this method.

RubyVM.stat #=> {:global_method_state=>134, :global_constant_state=>1085, :class_serial=>6514}

class Dummy; end #=> nil

# Note that global_constant_state changed

RubyVM.stat #=> {:global_method_state=>134, :global_constant_state=>1086, :class_serial=>6516}

def m; end #=> :m

RubyVM.stat #=> {:global_method_state=>135, :global_constant_state=>1086, :class_serial=>6516}

# Note that changing visibility invalidates cache, but you won't see that here

private :m #=> Object

RubyVM.stat #=> {:global_method_state=>135, :global_constant_state=>1086, :class_serial=>6516}

public :m #=> Object

RubyVM.stat #=> {:global_method_state=>135, :global_constant_state=>1086, :class_serial=>6516}
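Comparing these hashes by eye gets tedious, so here is a small sketch that reports only the counters that changed while a block ran. `vm_stat_delta` is a hypothetical helper name; `RubyVM.stat` itself is MRI-specific, and the keys shown above come from the Ruby version used for this post, so other versions may report different counters. That is why the sketch does not hard-code any key:

```ruby
# Hypothetical helper: runs the block and returns only the RubyVM.stat
# counters that changed while it ran. The available keys depend on the Ruby
# version, so nothing is hard-coded here.
def vm_stat_delta
  before = RubyVM.stat
  yield
  after = RubyVM.stat

  after.each_with_object({}) do |(key, value), delta|
    change = value - before.fetch(key, 0)
    delta[key] = change unless change.zero?
  end
end

# Defining an anonymous class with one method: candidates for a counter bump.
p vm_stat_delta { Class.new { def probe; end } }

# Plain arithmetic, for comparison.
p vm_stat_delta { 1 + 1 }
```

Keep in mind the limitation mentioned earlier: an empty result does not prove that nothing was invalidated, since visibility changes are not reflected in these counters.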

Benchmarking methodology

The general methodology I've decided to take is similar for single- and multithreaded apps. I just create many instances of an element and send a given message to each of them. This way MRI will have to do a method lookup after each invalidation.

class Dummy
  def m1
    rand.to_s
  end

  def m2
    rand.to_s
  end
end

threads = []

benchmark_task = -> { 100000.times { rand(2) == 0 ? Dummy.new.m1 : Dummy.new.m2 } }

I invalidate the method cache by creating a new OpenStruct object:

require 'ostruct'

OpenStruct.new(m: 1)

Of course, this alone would not work for the multithreaded benchmark, since the first invocation (in any thread) would place the method in the method cache. That's why, for multithreading, I've decided to declare a lot of methods on our Dummy class:

METHODS_COUNT = 100000

class Dummy
  METHODS_COUNT.times do |i|
    define_method :"m#{i}" do
      rand.to_s
    end
  end
end

It's worth noting that in a single-threaded environment, initializing a huge number of new OpenStruct objects can itself affect the final result. That's why the initializations were benchmarked separately as well. This allowed me to isolate the final performance difference.
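Put together, the single-threaded measurement could look roughly like the sketch below. It is a reconstruction under assumptions, not the exact script behind the charts: the helper names `time_calls` and `invalidation_overhead` are hypothetical, and the formula (subtract the cost of the OpenStruct initializations alone, then compare with the baseline) is my reading of the paragraph above. It also assumes the `ostruct` library is available, and the numbers will differ between Ruby versions.

```ruby
require 'benchmark'
require 'ostruct'

class Dummy
  def m1
    rand.to_s
  end

  def m2
    rand.to_s
  end
end

# Hypothetical helper: times `iterations` method calls, running `extra`
# before every call.
def time_calls(iterations, extra = -> {})
  Benchmark.realtime do
    iterations.times do
      extra.call
      rand(2) == 0 ? Dummy.new.m1 : Dummy.new.m2
    end
  end
end

# Assumed formula: remove the cost of building the OpenStruct objects from the
# invalidating run, then express what is left relative to the baseline.
def invalidation_overhead(iterations)
  baseline     = time_calls(iterations)
  with_ostruct = time_calls(iterations, -> { OpenStruct.new(m: 1) })
  ostruct_only = Benchmark.realtime { iterations.times { OpenStruct.new(m: 1) } }
  adjusted     = with_ostruct - ostruct_only

  {
    baseline: baseline,
    with_ostruct: with_ostruct,
    ostruct_only: ostruct_only,
    overhead_percent: (adjusted - baseline) / baseline * 100.0
  }
end

p invalidation_overhead(10_000)
```

A single run like this is noisy, so repeating it and averaging the results is closer to what the charts below show.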

Method cache invalidation for single threaded applications

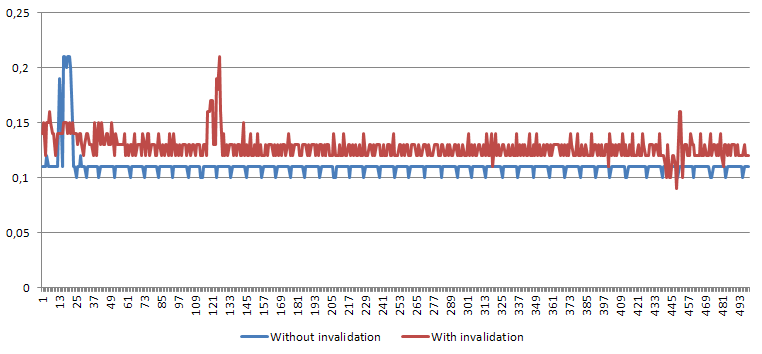

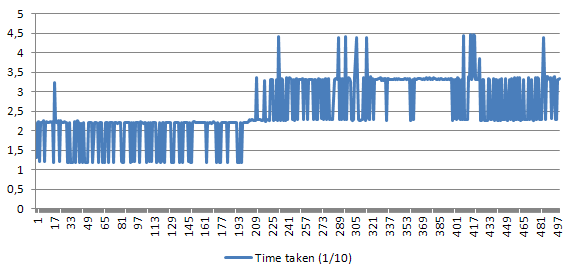

Apart from some CPU fluctuations (I've been running it on one of my servers), the difference can be clearly seen:

Here you can see the performance difference. Apart from some spikes, the difference is between 8 and 16%, and the average is 12.97%.

Still, we need to remember that this benchmark pairs one invalidation with just a few commands, so it doesn't exactly simulate a real app flow, where the ratio between commands and invalidations would not be close to 1:1. But even then, for a heavily loaded single-threaded app that uses a lot of OpenStructs or other cache-invalidating constructs, it might have a huge impact on performance.

Method cache invalidation for multithreaded applications

Benchmarking method cache invalidation in a multithreaded environment can be kind of tricky. I've decided to have 2 types of threads and a switch:

- Benchmarked threads - threads that I would be monitoring in terms of their performance

- Invalidating threads - threads that would invalidate the Ruby method cache over and over again

- Switch - allows turning cache invalidation on/off after a given number of iterations

Every invalidating thread looks like this:

threads << Thread.new do
  loop do
    # Either way create something, to neutralize GC impact
    @invalidate ? OpenStruct.new(m: 1) : Dummy.new
  end
end

I've also decided to check performance for the following thread combinations:

- Benchmarked threads: 1 / Invalidating threads: 1

- Benchmarked threads: 1 / Invalidating threads: 10

- Benchmarked threads: 10 / Invalidating threads: 1

- Benchmarked threads: 10 / Invalidating threads: 10
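A minimal sketch of how one such combination could be run is shown below. It is a simplification under assumptions rather than the original benchmark script: `run_combination` is a hypothetical name, the switch is reduced to an `invalidate:` flag that stays fixed for a whole run instead of flipping after a given number of iterations, and `METHODS_COUNT` is lowered so the sketch finishes quickly.

```ruby
require 'benchmark'
require 'ostruct'

METHODS_COUNT = 1_000 # the post uses 100000; kept small so the sketch runs quickly

class Dummy
  METHODS_COUNT.times do |i|
    define_method(:"m#{i}") { rand.to_s }
  end
end

# Hypothetical runner for one thread combination. Returns the average time
# (in seconds) that a benchmarked thread needed for `iterations` calls.
def run_combination(benchmarked:, invalidating:, iterations:, invalidate:)
  invalidators = Array.new(invalidating) do
    Thread.new do
      loop do
        # Either way create something, to neutralize GC impact
        invalidate ? OpenStruct.new(m: 1) : Dummy.new
      end
    end
  end

  workers = Array.new(benchmarked) do
    Thread.new do
      Benchmark.realtime do
        iterations.times { Dummy.new.public_send(:"m#{rand(METHODS_COUNT)}") }
      end
    end
  end

  # Thread#value joins the thread and returns the block's result (the timing).
  workers.map(&:value).sum / benchmarked
ensure
  # The invalidating threads loop forever, so stop them explicitly.
  invalidators.each(&:kill).each(&:join) if invalidators
end

[false, true].each do |invalidate|
  time = run_combination(benchmarked: 1, invalidating: 1,
                         iterations: 1_000, invalidate: invalidate)
  puts "1 benchmarked / 1 invalidating, invalidation #{invalidate ? 'on' : 'off'}: #{time.round(4)}s"
end
```

The other combinations only change the `benchmarked:` and `invalidating:` counts. Keeping the allocating threads alive even when invalidation is off follows the idea from the snippet above: both runs produce garbage, so the difference should come mostly from the invalidation itself.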

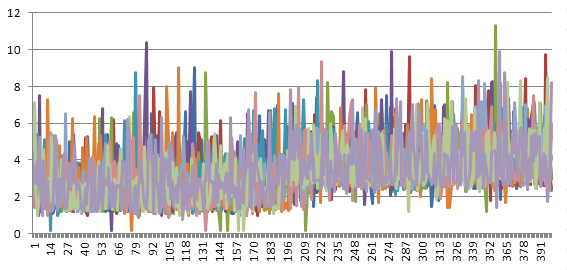

Here are the results:

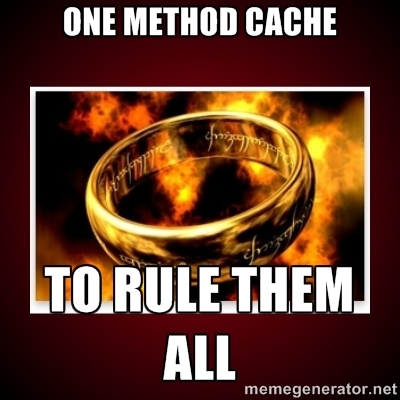

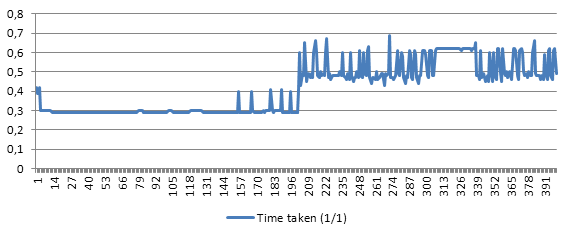

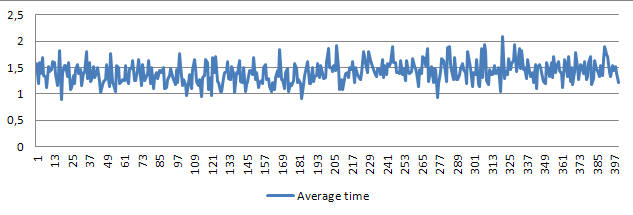

Benchmarked threads: 1 / Invalidating threads: 1

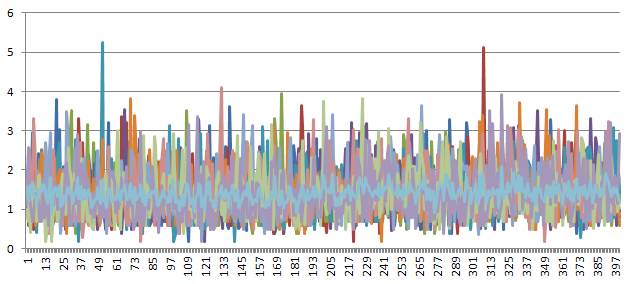

Benchmarked threads: 1 / Invalidating threads: 10

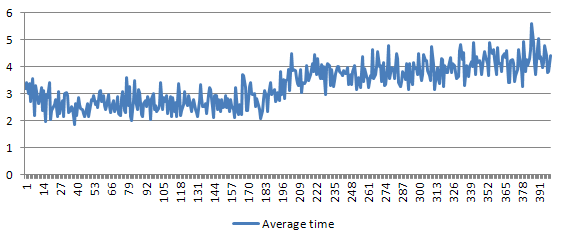

Benchmarked threads: 10 / Invalidating threads: 1

Benchmarked threads: 10 / Invalidating threads: 10

Conclusions

Even with just 2 threads, when 1 of them is heavily invalidating the method cache, the performance drop can be really high (around 30% or even more).

The overall performance drop for multiple working threads and multiple invalidating threads is around 23.8%. This means that if you have many threads (let's say you use Puma) and you work heavily with OpenStruct (or invalidate the method cache in a different way) in all of them, you could gain up to almost 24% by switching to something else. Of course, you will probably gain less, because every library has some impact on performance, but in general, the less you invalidate the method cache, the faster you go.

The 10:1 combination was quite interesting. There's almost no performance impact there (around 4.3%). Why? Because after the first invocation of a given method, it's already cached, so there won't be a cache miss on subsequent calls.

TL;DR: For multithreaded software written in Ruby, it's worth paying attention to what invalidates your method cache and when. You can get up to 24% better performance (but you'll probably get around 10% ;) ).
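As one possible "something else", here is a small sketch using a plain Struct. This is my own illustration, not something that was benchmarked for this post, and `Command` is a hypothetical name. The accessor methods are defined once, when the Struct class is created, so creating instances afterwards does not define any new methods:

```ruby
# Defined once at load time: the accessor methods are created here, not on
# every instantiation. `Command` is a hypothetical name for illustration.
Command = Struct.new(:m)

command = Command.new(1)

puts command.m                                     # value read through the shared accessor
puts command.singleton_methods.empty?              # no per-object methods were defined
puts Command.instance_method(:m).owner == Command  # the accessor lives on the class itself
```

Whether this actually helps in a given app depends on the Ruby version and on what else invalidates the cache, so it is worth measuring before and after the change.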

April 5, 2015 — 21:41

Fantastic article Maciej. Didn’t know that global cache’s impact on performance could be this much.

April 5, 2015 — 23:06

Thanks. Hope you’ve read also the conclusions in which I stated that this is a test environment and in a real app, the impact might be smaller but still with a heavy loaded app – it’s worth looking into the method cache (especially with multithreaded apps)

April 6, 2015 — 14:10

Afaik, in Ruby 2.1+, the method cache is not global anymore. Which Ruby version were these run on?

April 6, 2015 — 16:28

As far as I know, in 2.1 there’s still a method cache and it is still global but based on the class hierarchy, invalidating the cache for only the class in question and any subclasses. So my case is valid in Ruby 2.1+. And to answer your question – I’ve been running single thread on 2.1.something (a default for my rvm) and the multi on 2.2.1. But true – worth pointing out that in the post. Thanks!

April 6, 2015 — 20:23

Github “improved version” contains per-class cache:

https://github.com/github/ruby/