Introduction

Leaving Cracow's familiar scenes behind, I headed to Warsaw full of anticipation for the Ruby Warsaw Community Conference. This compact yet promising event was a day dedicated to Ruby enthusiasts like myself. Below are my afterthoughts on this distinctive event.

Speakers' Dinner and Before-Party

The speakers' dinner and pre-party set the tone for the conference, offering a warm, inviting atmosphere. Both venues, a well-chosen restaurant and a lively before-party spot, facilitated great conversations and networking among the attendees.

Conference Overview

The Ruby Warsaw Community Conference stood out with its compact, one-day structure, which initially made me skeptical about its depth. However, the event unfolded impressively, offering three engaging workshops in the morning and four insightful talks arranged in a 2:2 format (2 talks, break, 2 talks). The event sold out, and this unexpectedly effective, concise arrangement fostered a refreshing atmosphere, free from the typical conference fatigue.

Workshops

The workshops, a well-anticipated part of the conference, included:

Game Development in Ruby on Rails: A session diving into the creative possibilities of game development with Rails.

Fixing Performance Issues with Rails: Focused on pinpointing and solving common performance hurdles in Rails applications.

Rails 8 Rapid Start: Mastering Templates for Efficient Development: Offered a deep dive into the newest Rails features, focusing on templates.

I did not attend any of them, both because of final preparations and because I wanted to leave spots for others, but the buzz around these workshops was unmistakably positive. Their popularity hinted at a successful format. It also suggested room for a more inclusive approach, since not all attendees could secure a spot in a workshop: one option would be to integrate more talk sessions with workshop-style learning to meet the high demand.

Venue

The venue at Kinoteka, part of Warsaw's iconic Palace of Culture and Science, added a grand touch to the conference. The majestic setting, a landmark of architectural and cultural significance, provided a fitting backdrop for the event. The cinematic hall, known for its excellent acoustics and visual setup, ensured an immersive experience for speakers and attendees, complementing the event's vibrant discussions with its historical and cultural resonance.

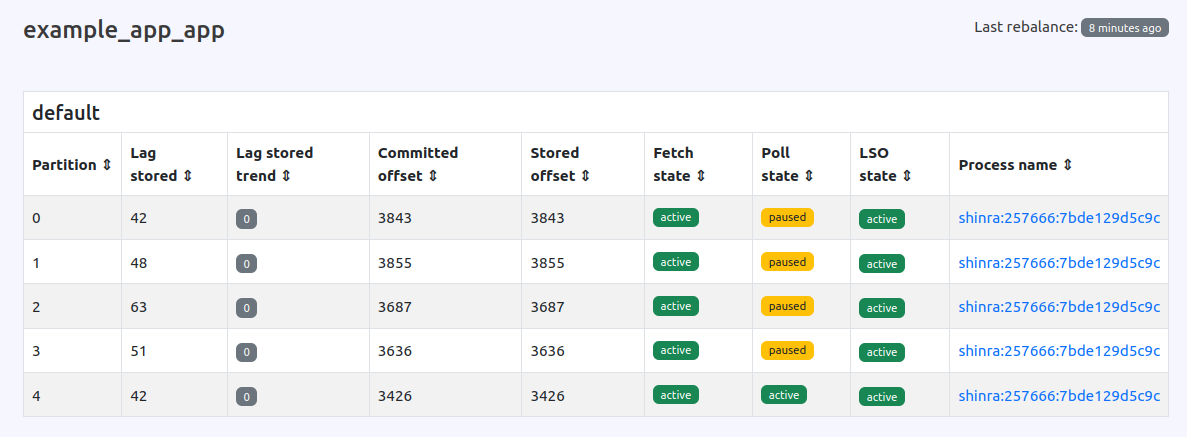

Talks

Aside from my talk (which I summarize below), there were three other talks:

- Zeitwerk Internals by Xavier Noria

- Renaissance of Ruby on Rails by Steven Baker

- Implementing business archetypes in Rails by Michał Łęcicki

Xavier Noria presenting

While you will never make everyone happy, I think that one of the rules of a good event is to have a bit of everything:

- A technical deep dive (by Xavier Noria) - I enjoyed seeing the conference opening talk at RailsWorld. Xavier delivers. He has a fantastic ability to simplify the internals of Zeitwerk (the library that I love and admire!) and to explain the complex and sometimes unexpected ways in which Ruby's internal code loading operates.

- Standup(ish) with a story (by Steven Baker) - Steven is a full-blooded comedian. He has an excellent ability to tell a story that seems unrelated to Ruby and Rails yet actually reflects the core ideas of the topic. He guided us through his career and life stories, using them to illustrate how far we have drifted away from the simplicity of building applications and how we as an industry have moved towards abstract scaling, layers, and isolation, often in projects that could be done with 90% fewer resources. It's one of those talks that gets you thinking more the longer you reflect on it.

- Architecture and System Design (Michał Łęcicki) - Michał provided a solid talk despite being a big-stage rookie. He started nicely with a funny background story about books that are great for putting you to sleep and that (surprise, surprise) are about software and architecture, then swiftly moved on to the complex and abstract challenges of using business archetype patterns. My only concern regarding this talk is that it was too abstract at some points. I expected more references to applying these patterns in the context of Ruby or Rails, especially with ActiveRecord. Though I am sure that the next iteration of this talk will include it :) Nonetheless, it was a solid debut in front of a big audience. I don't think I could have presented such a topic myself as my first talk ever.

- Something "else" (Me, see below)

My Talk: Future-Proofing Ruby Gems: Strategies for Long-Term Maintenance

In my talk, I aimed to provide insights and strategies for ensuring the long-term sustainability of Ruby gem development.

When I initially prepared this presentation, it included over 160 slides, which I had to condense to fit within the allotted 30-minute slot, including time for questions. Timing is always a stress point for me when delivering talks, so I'm happy that I stuck to the time frame, finishing in 31 minutes. It's a delicate balance, as talks can be either too short or, heaven forbid, too long. I hit the mark, even receiving a few extra minutes from the audience (a perk of being the last speaker) to cover additional material.

My talk delved into the critical topics of Ruby gem development and open-source software maintainability. While I don't anticipate that this talk will revolutionize the field, I hope it will inspire listeners to reevaluate their approaches to various engineering challenges.

One minor logistical challenge during my presentation is worth noting: no laptop stand was available, which prevented me and the other speakers from using speaker mode with notes and time tracking. Due to technical constraints, we had to send our presentations in advance and rely on the big screen behind us to keep track of our progress. This led to a more freestyle delivery than I had initially planned. I hope it did not adversely affect the outcome. All speakers managed to deliver engaging and informative talks.

Slides from my presentation can be found here:

https://mensfeld.github.io/future-proofing-ruby-gems

After-Party

The After-Party was a lively and memorable conclusion to the conference.

If you've ever attended a conference, you know the atmosphere. If not, go! The party continued until around 3 am, and if it hadn't been for the venue's closing time, some might have stayed even later.

Closing Thoughts

photo source: twitter.com/balticruby

The Ruby Warsaw Community Conference exceeded my expectations. Despite initial skepticism about its one-day format, the event seamlessly combined community spirit and technical depth.

Workshops and talks, including mine, offered valuable insights. The venue, housed in the iconic Palace of Culture and Science, added grandeur.

The lively After-Party facilitated connections among Ruby enthusiasts. In retrospect, I recommend this conference to the Ruby and Rails community. It proves that excellence can be found in simplicity.